Georgia researchers have designed this AI-powered backpack for the visually impaired

"For the past few years, I’ve been teaching robots how to see things" but meanwhile, "there are people who cannot see things and need help."

For people who are visually impaired, one of the biggest challenges is navigating public spaces.

Several tech solutions have emerged in recent years, vying to make the situation more manageable, such as smart glasses to identify everyday objects and connected canes that tell people when they’re approaching a curb.

Among the latest iterations of next-generation assistive accessories is a backpack powered by Intel’s artificial intelligence software. It’s designed to audibly alert wearers when they’re approaching possibly hazardous situations like crosswalks or strangers.

The backpack, which has yet to be named, was revealed last month but could face years of development before a consumer-ready version is launched. Still, the product offers a glimpse of what the future could look like as progress in AI and machine learning increasingly helps people with vision issues better perceive their environments and, therefore, live more independently.

The backpack was created by researchers at the University of Georgia, who took existing computer vision techniques and combined them into a system that seeks to replace the need for a cane or guide dog.

Irony was really the driving force behind the idea, according to Jagadish K. Mahendran, the lead researcher at the University of Georgia who specializes in computer vision for robots.

“I met with my visually impaired friend, and she was describing problems to me that she faces daily. And I was struck: For the past few years, I’ve been teaching robots how to see things while there are people who cannot see things and need help,” Mahendran said.

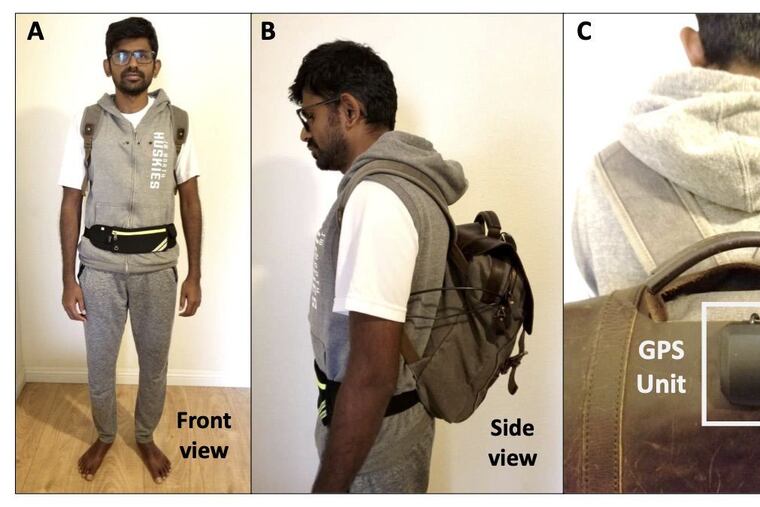

There’s nothing outwardly special about the backpack’s design: It looks like an ordinary gray knapsack with a small computer, such as a laptop, inside. A matchbox-sized GPS unit is affixed to the outside.

In a demonstration video, the user also wears a vest with tiny holes to conceal an embedded AI camera. When connected to the computer, the 4K camera captures depth and color information used to help people avoid things like hanging branches. The camera can also be embedded in a fanny pack or another waist-worn pouch.

The spatial camera, built by the computer vision company Luxonis, can read signs, detect crosswalks, and see coming changes in elevation.

Bluetooth earphones let the user and the system talk to each other. The wearer can ask out loud for location information, and the system will tell them where they are. If the camera spots a threat like an incoming pedestrian, the system can warn the wearer.
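
To make the idea concrete, here is a minimal Python sketch of how detections from a depth camera might be turned into short spoken warnings. It is not the researchers' code: the `Detection` fields, the `direction` and `build_alerts` helpers, the 15-degree bearing cutoff, and the 3-meter alert range are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One object reported by a (hypothetical) vision pipeline."""
    label: str          # e.g. "pedestrian", "branch"
    distance_m: float   # estimated distance taken from depth data
    bearing_deg: float  # negative = left of the wearer, positive = right


def direction(bearing_deg: float) -> str:
    """Map a bearing to a coarse spoken direction (assumed 15-degree cutoff)."""
    if bearing_deg < -15:
        return "left"
    if bearing_deg > 15:
        return "right"
    return "ahead"


def build_alerts(detections, max_distance_m=3.0):
    """Return warning phrases for nearby objects, closest first."""
    nearby = [d for d in detections if d.distance_m <= max_distance_m]
    nearby.sort(key=lambda d: d.distance_m)
    return [
        f"{d.label} {direction(d.bearing_deg)}, {d.distance_m:.1f} meters"
        for d in nearby
    ]
```

In a real device, each phrase would be passed to a text-to-speech engine and played through the earphones. Sorting by distance is one plausible way to make sure the most urgent warning is spoken first.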

It’s too soon to know how much such a device would cost consumers, but several start-ups and organizations are working to solve the same issues, and the tech doesn’t come cheap.

WeWALK’s smart cane with obstacle detection sells for $600, 10 times as much as an ordinary white cane. OrCam MyEye Pro, a wireless smart camera that reads what’s in front of you, runs $4,250.

Researchers at the University of Georgia went with a backpack design because it would help visually impaired people avoid unwanted attention. They used Intel’s Movidius computing chip because it was small and powerful enough to run advanced AI functions with low latency.

The next step is to raise funds and expand testing. The researchers hope to one day release an open-source, AI-based visual-assistance system. They have formed a team called Mira, made up of some visually impaired volunteers.

“We want this solution to be inclusive and as transparent as possible,” Mahendran said. “Our main motto is to increase the involvement of visually impaired people in their daily activities and reduce their dependency on others.”