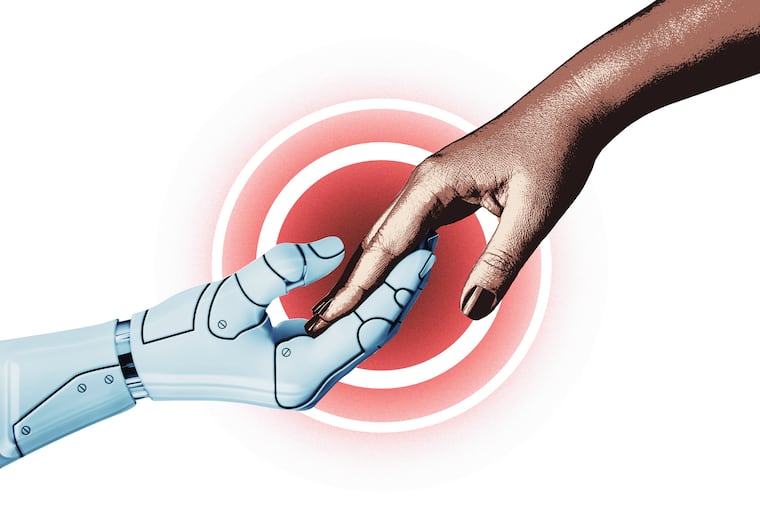

I tried a free AI chatbot for therapy. I was surprised by what happened next.

Black people often struggle to find therapists who can understand their distinctive experiences. So when an AI chatbot helped me with code-switching and microaggressions, I was floored.

It’s hard finding a therapist these days. Especially if you’re Black.

Millions of Americans have some form of mental illness, but more than one-quarter can’t get the treatment they need. This is especially true for people of color.

Only 4% of U.S. psychologists are Black. This lack of representation means Black patients — especially Black women — have little access to professionals who understand their distinctive experiences that occur at the intersection of race and gender, such as stigma, bias, and systemic discrimination. So I asked myself the question nearly everyone seems to be asking nowadays about nearly everything: Could artificial intelligence help?

It’s already happening. A new app for ChatGPT called “Black Female Therapist” offers therapy with a “chatbot,” in which you type in your questions or concerns and the chatbot uses AI to return a response that is “tailored to the specific needs of Black individuals,” according to the site.

I’m guessing it won’t be smooth sailing. Like all apps built on the ChatGPT platform, the Black Female Therapist was trained on data that contained biases on race, gender, and other factors. And there are inherent risks associated with AI therapy; last year, a man in Belgium died by suicide after an AI chatbot suggested he do so. (He had told the chatbot his sacrifice would save the Earth from climate change.)

But given the lack of options for Black women, I decided to try a free chatbot to see if it could provide culturally competent therapy. (This ruled out Black Female Therapist, which requires a subscription to ChatGPT Plus.)

I admit I didn’t have high hopes.

To see what an AI chatbot had to say about a Black woman’s experience at a predominantly white institution (or PWI, in shorthand) like the one I attend, I typed in the following: “Lately, I’ve just been feeling off. You know, being at a PWI, I always have to code switch.” (Meaning, change the way I speak and behave by denying aspects of my culture to assimilate to upper-class Western, or white, ideals.)

The chatbot responded with a lengthy paragraph about code-switching, and how it’s important to have spaces where I don’t have to code-switch. But what was really interesting was the following: “Don’t be too hard on yourself if you sometimes slip up or opt out of code-switching in certain situations when it feels inauthentic.”

Gee, thank you, AI chatbot, for telling me that it is OK to choose not to code-switch, or mess up when code-switching! Also, when does code-switching feel authentic? If a human professional said this to me, I would probably not return for another therapy session.

But I continued with the following prompt: “I rarely get to connect with other Black women on campus. There is so much pressure to just be perfect because like if I get a bad grade, they will see all Black people as stupid. The white and Asian kids already ignore me most of the time because they don’t think I could ever know the answer to whatever math question and even when I do practically shout my correct answer, they give me dirty looks and act like I could never have actually been correct. And you know, I don’t want to shout too loud because then I’ll be the ‘angry Black woman.’”

To my surprise, the chatbot actually appeared to understand and validate my feelings. It acknowledged how demoralizing the experience was, and how trying to be perfect is an unfair burden I have to carry as a Black woman. “Please don’t internalize their ignorance as a reflection on you. Their behaviors likely stem from their own insecurities, lack of exposure, and implicit biases they need to unlearn. You deserve to be in spaces where your strengths are celebrated, not minimized.”

I needed to hear that the actions of those students had nothing to do with me and that I deserve a space in which I am not being minimized.

I hate to admit it, but I became cautiously optimistic about the use of the chatbot as therapy, so I gave it one more prompt: “I was in line to get food at the [dining hall] today, one of my white classmates touched my braids and said, ‘Is that all your real hair?’” This experience was not only deeply uncomfortable but also objectifying, and it left me feeling violated. I hoped the chatbot would shed some light on how my classmate’s behavior was unacceptable and related to my identity as a Black woman. But would the chatbot see that my classmate’s behavior was about race, not just a simple violation of personal space?

“That is completely unacceptable behavior from your white classmate,” the chatbot told me. “Having your personal space violated by someone touching your hair without consent, on top of the ignorant and racist questioning about whether your hairstyle is ‘real,’ is deeply disrespectful and dehumanizing. You have every right to feel angry, violated, and hurt by that interaction. For someone to treat you and your cultural hair practices/styles as some curiosity to be gawked at or interrogated shows an appalling lack of racial sensitivity and respect for your bodily autonomy.

“Microaggressions like this, even if supposedly ‘innocent,’ perpetuate the objectification of Black bodies and the policing of Black hairstyles/aesthetics as somehow suspicious or inauthentic. It stems from a place of privilege to feel entitled to make assumptions or invasive comments about something so personal and culturally significant.”

I was surprised. In this response, the chatbot demonstrated immense cultural competency, even though I had intentionally left out how I felt about the encounter. It made me feel seen and heard and provided good insight.

Now, am I telling Black women to stop seeing their Black therapists and use AI? No. Even the chatbot suggested that I look into a Black therapist. Still, my experience made me think chatbots could be a useful tool (used with caution) for Black women who need mental health care but lack access to Black professionals, or an extra resource between therapy sessions.

My experience showed me how important it is to have a therapist — either human or robot — who understands my distinctive experiences, based on my race and gender. For that, there must be more time and energy put into training non-Black professionals on the Black experience, and Black female experience, as well as creating pathways for more Black women to become therapists. Until that happens, Black women can work on building AI therapists that are for us, by us, so we can create a reality in which Black women can get the mental health care they so deeply deserve.

Gianna Tout-Puissant is a student at Harvard University. She is from Bethlehem.